In FINDHR research, technology, law, and ethics are interwoven. Our Lab leads the cross-cultural digital ethics theme, and our partners bring expertise in a wide range of domains: data protection law; non-discrimination law; intersectional gender analysis; algorithmic fairness and explainability; privacy and responsible data practices and models; and auditing of digital services. In particular, we provide consultancy on cross-cultural product design to support the fair treatment of marginalised groups. We are currently exploring the socio-ethical implications of data de-biasing and of fairness in synthetic data generation, as well as the potential of AI-driven technologies to shape and transform the future of work.

Select Outputs

Training on Reducing AI bias and discrimination

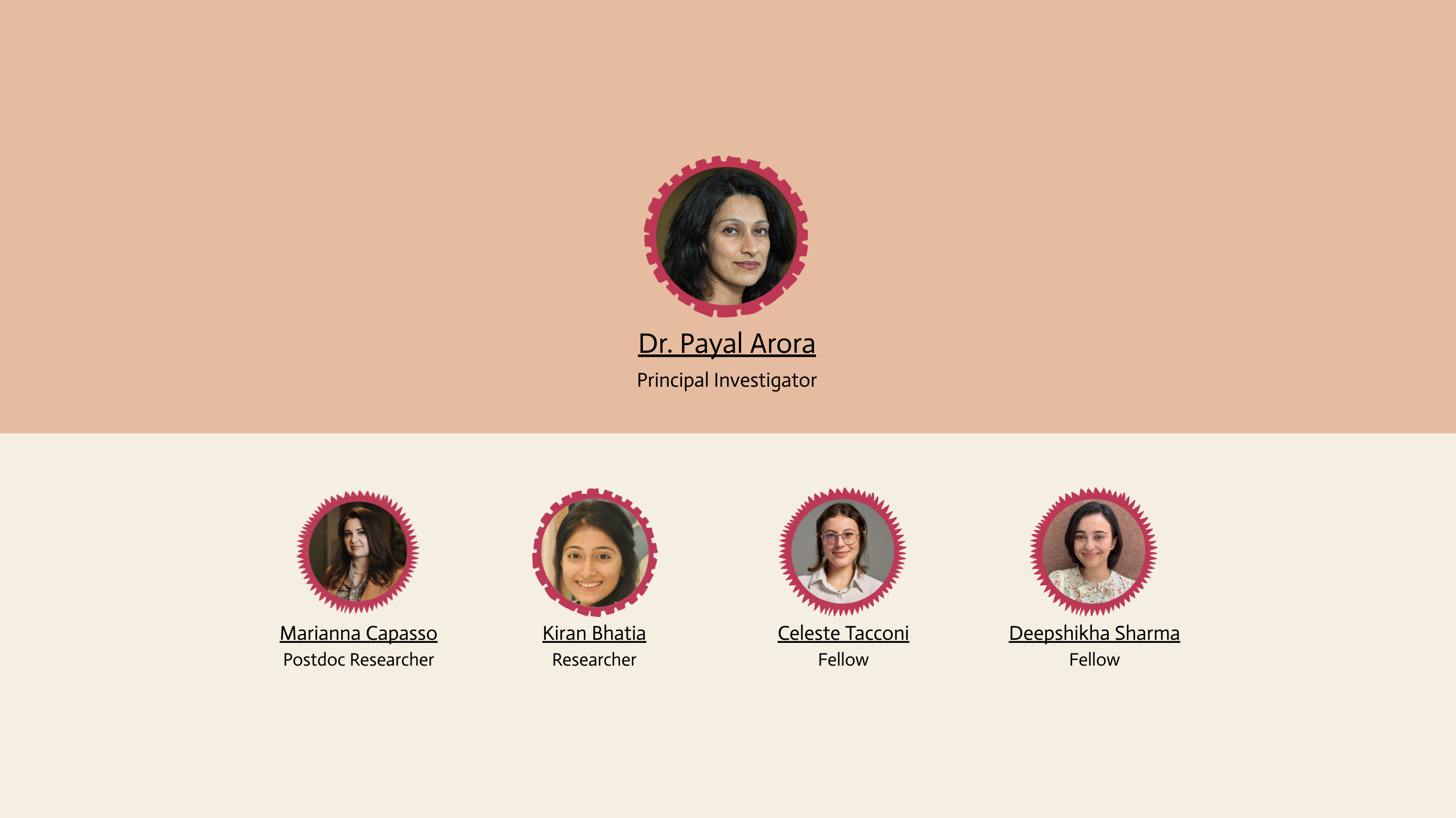

Marianna Capasso, Payal Arora, Deepshikha Sharma and Celeste Tacconi: On the Right to Work in the Age of Artificial Intelligence: Ethical Safeguards in Algorithmic Human Resource Management. The Business and Human Rights Journal, 2025.

Philippe de Wilde, Payal Arora, Fernando Buarque, Yik Chan Chin, Mamello Thinyane, Serge Stinckwich, Eleonore Fournier-Tombs and Tshilidzi Marwala: Recommendations on the Use of Synthetic Data to Train AI Models. UNU Centre, UNU-CPR, UNU Macau, 2024.